CASE STUDY OF MR/AR PROJECT FOR GRATXRAY

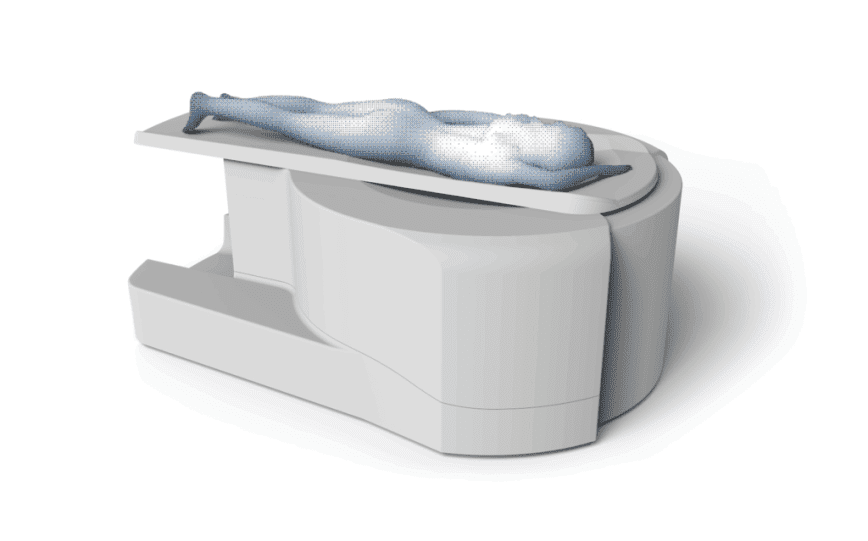

GratXray needed a reliable way to demonstrate its next-generation breast CT scanner without transporting hardware to hospitals and medical conferences. The goal was simple and critical: let doctors see the device at full size, understand its motion paths, and evaluate the clinical workflow without guesswork, lag, or visual gimmicks. We built a Mixed Reality solution for Meta Quest 3 and a synchronized companion tablet app so clinicians can walk around the system, observe real-world occlusion, and watch CT and tomosynthesis animations triggered in real time by a presenter. The system had to work offline, run at 90–120 FPS, keep the device pose consistent across multiple headsets, and operate in kiosk-safe environments. Result: a stable, hospital-ready MR platform that communicates GratXray’s technology clearly and reduces the travel and setup effort of bringing physical devices on site.

Executive Summary

We built a Mixed Reality application for Meta Quest 3 with AR support that allows GratXray to accurately place and demonstrate a floor‑anchored breast CT system at hospitals and congresses. Observers can walk freely around the virtual device in passthrough MR without needing to interact, while a presenter uses a computer to trigger predefined animations and watch a cast of a headset’s view. The solution supports multi‑headset co‑location, kiosk mode, offline operation, and sustained performance at 90–120 FPS, all without cables.

Client

GratXray AG — Dr. Simon Spindler

Rütistrasse 14, CH‑8952 Schlieren, Switzerland

Keep contact details within confidential circulation.

Objectives

- Accurate floor‑constrained placement of the breast CT anywhere in a room.

- High‑fidelity passthrough visualization; observers can walk around freely.

- External computer triggers predefined animations; presenter can view headset cast.

- Multi‑user co‑location so all viewers see one device in one place with synchronized motion.

- Hands‑off observer experience; kiosk mode prevents accidental exits.

Key Constraints

- No cables during use; app continues when headsets are donned/doffed.

- Stable 90–120 FPS; ≥ 60 minutes continuous runtime.

- Model budget ≤ 200,000 triangles for the CT asset.

- No guardian boundary; safe free‑movement visualization.

- Operable via local router without internet access.

Key Technical Challenges & Our Solutions

Critical engineering problems solved for medical‑grade demonstration reliability.

Meta Quest Sync — No Internet

Challenge: Keep 1–5 Quest headsets in sync without cloud services or a WAN connection.

- Local router only — no external servers

- Shared anchor resolution + deterministic state machine

- UDP multicast + keyframe confirmations

- Auto resync on headset wake / join

Solution: Local timebase + anchor exchange + timeline sync.
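
As an illustration of how a local timebase and timeline sync can fit together, the sketch below estimates a headset's clock offset from one round trip to the presenter machine and derives the animation playhead from a shared start time. It is a minimal sketch, not the project's code: the function names, the symmetric-delay assumption, and the `ct_scan` animation name are all hypothetical.

```python
from dataclasses import dataclass


def estimate_offset(t_send: float, t_server: float, t_recv: float) -> float:
    """Estimate (presenter clock - headset clock) from one round trip.

    t_send and t_recv are read on the headset clock, t_server on the
    presenter clock. Assumes the network delay is symmetric (assumption).
    """
    round_trip = t_recv - t_send
    return t_server - (t_send + round_trip / 2.0)


@dataclass
class TimelineEvent:
    animation: str     # hypothetical animation name, e.g. "ct_scan"
    start_time: float  # start instant on the presenter clock, in seconds


def playhead(event: TimelineEvent, local_now: float, offset: float,
             duration: float) -> float:
    """Return the animation time a headset should display right now."""
    shared_now = local_now + offset          # convert to the presenter clock
    elapsed = shared_now - event.start_time  # time since the animation began
    return min(max(elapsed, 0.0), duration)  # clamp to the clip length


# Usage: a headset whose clock runs about 5 s behind the presenter machine.
offset = estimate_offset(t_send=10.0, t_server=15.02, t_recv=10.04)
event = TimelineEvent(animation="ct_scan", start_time=20.0)
current_time = playhead(event, local_now=17.5, offset=offset, duration=8.0)
```

Because the playhead is derived from the shared start time rather than from per-frame messages, a headset that wakes or joins late lands on the same point of the animation as the others once it has an offset estimate.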

Human Occlusion & Depth Accuracy

Challenge: The virtual CT must be hidden behind real people and objects in MR.

- Quest depth API + confidence masks

- Stencil + depth pre‑pass for clean edges

- Dynamic fallback to silhouette occlusion

Solution: Real‑time depth‑aware compositing pipeline.
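
The per-pixel decision behind depth-aware occlusion with a silhouette fallback can be sketched as follows. This is a simplified CPU illustration, not the shader used in the project: the confidence threshold, the depth bias, and the rule "hide the virtual pixel inside a person silhouette when depth is unreliable" are assumptions made for the example.

```python
def is_virtual_pixel_visible(virtual_depth_m: float, real_depth_m: float,
                             confidence: float, in_silhouette: bool,
                             min_confidence: float = 0.5,
                             bias_m: float = 0.02) -> bool:
    """Decide whether one pixel of the virtual CT should be drawn.

    min_confidence and bias_m are illustrative values (assumptions).
    """
    if confidence >= min_confidence:
        # Trusted depth: draw only if the virtual surface is in front of
        # the real one. The small bias reduces flicker at near-equal depths.
        return virtual_depth_m < real_depth_m + bias_m
    # Untrusted depth: fall back to the silhouette mask and assume that a
    # detected person stands in front of the virtual device.
    return not in_silhouette


def composite_row(virtual_depths, real_depths, confidences, silhouette):
    """Apply the per-pixel test to one row of pixels."""
    return [
        is_virtual_pixel_visible(v, r, c, s)
        for v, r, c, s in zip(virtual_depths, real_depths, confidences, silhouette)
    ]


# Usage: the virtual CT sits 2 m away along a four-pixel row.
row = composite_row(
    virtual_depths=[2.0, 2.0, 2.0, 2.0],
    real_depths=[3.0, 1.0, 1.0, 3.0],
    confidences=[0.9, 0.9, 0.1, 0.1],
    silhouette=[False, False, False, True],
)
```

In a real renderer the same comparison would run on the GPU per fragment; the point here is only the order of the checks, with depth used first when it is trusted and the silhouette used otherwise.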

AR ↔ MR Shared Pose System

Challenge: Tablet AR and Quest MR must show the device in the same spot.

- Shared anchor framework

- Pose gateway + smoothing filter

- Universal animation events + timestamps

Solution: Common world anchor sync + cross‑device pose fusion.
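
One way to picture the shared-anchor idea: the CT pose is stored relative to the common anchor, and each device converts it into its own tracking frame using the anchor pose it resolved locally. The sketch below does this for a floor-constrained pose (position on the floor plus yaw) and adds a simple exponential smoothing step. The `Pose2D` type, the axis convention, and the smoothing factor are assumptions for illustration, not the project's pose gateway.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float
    z: float
    yaw: float  # radians, rotation about the vertical axis


def wrap_angle(angle: float) -> float:
    """Wrap an angle into the range [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def compose(parent: Pose2D, child: Pose2D) -> Pose2D:
    """Express `child` (given in the parent's frame) in the parent's outer frame."""
    c, s = math.cos(parent.yaw), math.sin(parent.yaw)
    return Pose2D(
        parent.x + c * child.x - s * child.z,
        parent.z + s * child.x + c * child.z,
        wrap_angle(parent.yaw + child.yaw),
    )


def smooth(previous: Pose2D, target: Pose2D, alpha: float) -> Pose2D:
    """Move a fraction `alpha` of the way from `previous` to `target`."""
    dyaw = wrap_angle(target.yaw - previous.yaw)  # shortest rotation
    return Pose2D(
        previous.x + alpha * (target.x - previous.x),
        previous.z + alpha * (target.z - previous.z),
        wrap_angle(previous.yaw + alpha * dyaw),
    )


# Usage: the CT is placed 1 m along the anchor's x axis. Each device has
# resolved the same anchor at a different pose in its own tracking frame.
ct_in_anchor = Pose2D(1.0, 0.0, 0.0)
anchor_in_quest = Pose2D(1.0, 2.0, math.pi / 2)
anchor_in_tablet = Pose2D(-0.5, 0.0, 0.0)
ct_in_quest = compose(anchor_in_quest, ct_in_anchor)
ct_in_tablet = compose(anchor_in_tablet, ct_in_anchor)
```

The two results differ numerically because each device has its own origin, but they refer to the same physical spot on the floor, which is what keeps the tablet and headset views aligned.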

Performance at Medical Demo Quality

Challenge: Sustain 90–120 FPS in the headset while casting and network sync are running.

- URP mobile tuning + GPU instancing

- 200k tri limit, LODs, baked materials

- Network ticks decoupled from render

- Adaptive resolution + v‑sync policies

Solution: Render budget discipline + asynchronous network layer.
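
To make "network ticks decoupled from render" concrete, the sketch below computes the per-frame time budget and uses a fixed-timestep accumulator so that network updates run at their own rate regardless of frame rate. It is an illustrative pattern under assumed values (tick rate, backlog cap), not the project's networking layer, and the class and function names are hypothetical.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / fps


class FixedTicker:
    """Runs network ticks at a fixed rate, independent of render frame time."""

    def __init__(self, tick_hz: float, max_ticks_per_frame: int = 4):
        self.interval = 1.0 / tick_hz       # seconds between network ticks
        self.max_ticks = max_ticks_per_frame
        self.accumulator = 0.0              # unprocessed time, in seconds

    def advance(self, frame_dt: float) -> int:
        """Add one frame's elapsed time; return how many ticks to run now."""
        self.accumulator += frame_dt
        due = int(self.accumulator // self.interval)
        if due > self.max_ticks:
            # After a long stall (for example a doffed headset), drop the
            # backlog instead of flooding the frame with catch-up ticks.
            self.accumulator = 0.0
            return self.max_ticks
        self.accumulator -= due * self.interval
        return due


# Usage: network ticks at an assumed 30 Hz while rendering at 90 FPS.
ticker = FixedTicker(tick_hz=30.0)
budget_90 = frame_budget_ms(90.0)    # about 11.1 ms per frame
budget_120 = frame_budget_ms(120.0)  # about 8.3 ms per frame
ticks_this_frame = ticker.advance(1.0 / 90.0)
```

The render loop calls `advance` once per frame and runs that many network updates, so a change in frame rate alters how often the ticker is polled but not how often the network state steps.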

Impact & Results

Clinical clarity, exhibition reliability, and future‑proof asset pipeline.

Clinical Impact

- Doctors see true scale + motion paths

- Hands‑free guided viewing for clinicians

- Occlusion ensures realism + trust

Operational Impact

- Offline‑safe for conferences + hospitals

- Kiosk mode stops accidental exits

- Future CT revisions plug‑and‑play
