CASE STUDY OF MR/AR PROJECT FOR GRATXRAY

GratXray needed a reliable way to demonstrate its next-generation breast CT scanner without transporting hardware to hospitals and medical conferences. The goal was simple and critical: let doctors see the device in full size, understand its motion paths, and evaluate clinical workflow — without guessing, lag, or visual gimmicks. We built a Mixed Reality solution for Meta Quest 3 and a synchronized companion tablet app so clinicians can walk around the system, observe real-world occlusion, and watch CT and tomosynthesis animations triggered in real time by a presenter. The system had to work offline, run at 90–120 FPS, hold pose accuracy across multiple headsets, and operate in kiosk-safe environments. Result: a stable, hospital-ready MR platform that communicates GratXray’s technology clearly and reduces travel and setup effort for physical devices.

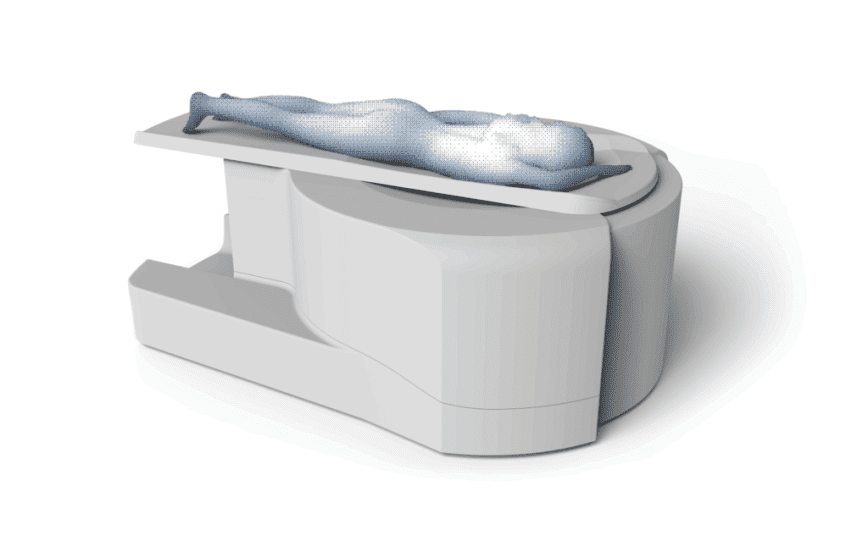


Executive Summary

We built a Mixed Reality application for Meta Quest 3 that allows GratXray to accurately place and demonstrate a floor‑anchored breast CT system at hospitals and congresses. Observers can freely walk around the virtual device in passthrough MR without interacting, while a presenter uses a computer to trigger predefined animations and stream a headset’s view. The solution supports multi‑headset co‑location, kiosk mode, offline operation, and sustained performance at 90–120 FPS—without cables.

Client

GratXray AG — Dr. Simon Spindler

Rütistrasse 14, CH‑8952 Schlieren, Switzerland

Keep contact details within confidential circulation.

Objectives

- Accurate floor‑constrained placement of the breast CT anywhere in a room.

- High‑fidelity passthrough visualization; observers can walk around freely.

- External computer triggers predefined animations; presenter can view headset cast.

- Multi‑user co‑location so all viewers see one device in one place with synchronized motion.

- Hands‑off observer experience; kiosk mode prevents accidental exits.

Key Constraints

- No cables during use; app continues when headsets are donned/doffed.

- Stable 90–120 FPS; ≥ 60 minutes continuous runtime.

- Model budget ≤ 200,000 triangles for the CT asset.

- No guardian boundary; safe free‑movement visualization.

- Operable via local router without internet access.

Solution Overview

MR App for Meta Quest 3

- Passthrough MR: Cable‑free visualization via color passthrough.

- Precise Placement: Floor‑anchored placement tool; pose locked after setup.

- Occlusion: Depth‑aware occlusion by people/objects where supported.

- Kiosk Mode: Prevent exit to system UI; ArborXR integration optional.

- Lifecycle: Session persists when a headset is taken off; auto‑reconnect when it is put back on.

External Computer Control & Streaming

- Trigger Panel: Start/stop predefined animations from a PC or laptop.

- Casting: Stream one headset’s view (including passthrough) to the computer.

- Placement Control: Finalize placement from PC or within the headset.

Multi‑User Co‑location

- Shared Anchors: All headsets resolve the same world anchor.

- Synchronized Animations: PC triggers update all connected headsets simultaneously.

- Scale: Typical setups support 1–5 headsets.
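As a rough illustration of how a PC trigger could reach every headset, the sketch below encodes a trigger message and tracks which headsets have confirmed it. The field names (`seq`, `animation`, `start_time`), the headset ids, and the `AckTracker` class are hypothetical and not taken from the delivered code; the transport (for example UDP on the local router) is left out.

```python
import json


def encode_trigger(seq: int, animation: str, start_time: float) -> bytes:
    """Serialize one animation trigger for sending over the local network."""
    message = {"seq": seq, "animation": animation, "start_time": start_time}
    return json.dumps(message, sort_keys=True).encode("utf-8")


def decode_trigger(payload: bytes) -> dict:
    """Parse a trigger received by a headset."""
    return json.loads(payload.decode("utf-8"))


class AckTracker:
    """Remembers which headsets still have to confirm a given trigger."""

    def __init__(self, headset_ids):
        self.headset_ids = set(headset_ids)
        self.pending = {}  # seq -> set of headset ids that have not confirmed

    def sent(self, seq):
        # Every known headset owes a confirmation for this trigger.
        self.pending[seq] = set(self.headset_ids)

    def ack(self, seq, headset_id):
        # Unknown sequence numbers are ignored.
        self.pending.get(seq, set()).discard(headset_id)

    def unconfirmed(self, seq):
        # Headsets listed here are candidates for a resend.
        return sorted(self.pending.get(seq, set()))
```

The presenter PC would call `sent` when it broadcasts a trigger and resend to whatever `unconfirmed` still lists after a short timeout.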

Predefined Animations

Parameterised motion along well‑defined axes to mirror CT and tomosynthesis operations.

- Height up/down

- Gantry rotation (clockwise / counter‑clockwise)

- Detector displacement (back / forward)

- Paddle displacement (back / forward)

- Tomosynthesis arm rotation (clockwise / counter‑clockwise)

- CT acquisition sequence

- Tomosynthesis acquisition sequence

Asset & Import Rules

- ≤ 200k triangles; LOD‑ready; mobile‑optimized PBR textures.

- Documented axis conventions, pivots, and hierarchy for repeatable import.

- Deterministic animation curves with parameter ranges per mechanism.

A short asset guide details structure, axes, and pivots so newer versions import without code changes.
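To make "parameter ranges per mechanism" concrete, here is a minimal sketch of a range‑checked animation parameter. The mechanism names, units, and numeric ranges are placeholders for illustration, not GratXray's actual values, and a linear curve stands in for whatever curves the asset guide defines.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mechanism:
    """One animatable axis of the CT model (hypothetical names and ranges)."""
    name: str
    minimum: float
    maximum: float
    unit: str

    def evaluate(self, t: float) -> float:
        """Map normalized time t in [0, 1] to a value inside the allowed range."""
        t = min(1.0, max(0.0, t))  # clamp so bad input cannot overshoot the range
        return self.minimum + (self.maximum - self.minimum) * t


# Placeholder ranges, for illustration only.
MECHANISMS = {
    "height": Mechanism("height", 0.0, 0.4, "m"),
    "gantry_rotation": Mechanism("gantry_rotation", -180.0, 180.0, "deg"),
}


def sample(name: str, t: float) -> float:
    """Look up a mechanism by name and evaluate its curve at time t."""
    return MECHANISMS[name].evaluate(t)
```

Because the output depends only on `name` and `t`, every device that evaluates the same normalized time gets the same value, which is the property the synchronized playback relies on.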

Tablet Companion App (iOS / Android)

Mode 1 — Virtual Display (Standalone)

- Touch gestures to inspect the CT model.

- Side buttons trigger: height, gantry, detector, paddle, tomo arm, CT, tomo.

Mode 2 — Augmented Display (Standalone AR)

- Place the CT in a room via AR to view from all sides.

- On‑screen buttons for animations; reset/re‑place in settings.

Mode 3 — Combined AR (Synchronized)

- Connects to the MR session; animations triggered from the admin PC are mirrored in the tablet's AR view.

- Placement sourced from Mode 2 or the MR app’s anchor.

Runs in guided access / lock‑task to prevent exit during demos.

Demo Workflow

- Setup: Place CT on the floor (via headset or PC); confirm orientation and scale.

- Join: Additional headsets auto‑resolve the shared anchor; kiosk mode active.

- Present: Start animations from PC; cast one headset’s view to the screen.

- Wrap: Session persists across doff/don; no cables required.

Performance & Stability

| Metric | Target | Status |
|---|---|---|
| Frame rate | 90–120 FPS | Meets |
| Continuous runtime | ≥ 60 minutes | Meets |
| Connectivity | Local router, no internet | Supported |
| Casting FPS | Lower than headset | Acceptable |

Architecture

- Unity XR (OpenXR) targeting Meta Quest 3.

- Local network session for headset sync and PC control.

- Shared anchors for co‑location; state replication for synchronized animations.

- One headset cast to PC for presenter guidance.

Tech Stack

- Unity, OpenXR, Meta XR SDK

- URP mobile rendering, GPU instancing

- Optional device management: ArborXR

- Lock‑task / kiosk via platform‑level controls

Hardware & Network

- 1–5 × Meta Quest 3 headsets (fully charged; cable‑free in operation).

- Presenter laptop/PC (animation panel + casting).

- Local Wi‑Fi 6/6E router recommended; offline operation supported.

Operations & Control

- Headsets remain connected when taken off; auto‑reconnect on wear.

- Full source code delivery for future modifications.

- Asset import rules documented for new CT revisions.

Timeline

- 24.10.2025 — Beta version for testing.

- 14.11.2025 — Final product delivery.

Deliverables

- MR app (Quest 3), internal distribution only.

- External PC interface (animation triggers + casting).

- Tablet app (iOS/Android) with three modes.

- Kiosk mode configuration (ArborXR optional).

- Source code and setup/usage documentation.

Results

- Consistent, accurate in‑room placement across devices.

- Presenter‑led demos with synchronized multi‑viewer experience.

- Reduced congress setup overhead; fully offline capable.

Why It Matters

- Explains CT and tomosynthesis motion paths clearly to clinicians.

- Avoids physical device logistics for early‑stage demos.

- Reusable asset pipeline for future CT revisions.

Confidentiality Notice

This case study is confidential and intended solely for GratXray AG and its designated partners and contractors. Do not publish or circulate outside approved recipients. All trademarks and product names are the property of their respective owners.

© 2025 — Internal Case Study · File: GRX‑MR‑AR‑CS · Status: Confidential

Key Technical Challenges & Our Solutions

Critical engineering problems solved for medical‑grade demonstration reliability.

Meta Quest Sync — No Internet

Challenge: Keep 1–5 Quest headsets in sync without cloud or WAN access.

- Local router only — no external servers

- Shared anchor resolution + deterministic state machine

- UDP multicast + keyframe confirmations

- Auto resync on headset wake / join

Solution: Local timebase + anchor exchange + timeline sync.
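One way a local timebase and timeline sync can work is sketched below: each headset estimates its clock offset to the presenter PC from a single round trip, then derives the animation playhead from the shared start time. This assumes symmetric network latency; the function names are illustrative and do not come from the project source.

```python
def estimate_offset(t_send: float, t_server: float, t_recv: float) -> float:
    """Estimate (server clock - local clock) in seconds from one round trip.

    t_send and t_recv are local timestamps; t_server is the server's timestamp
    in its reply. Latency is assumed to be equal in both directions.
    """
    midpoint = (t_send + t_recv) / 2.0
    return t_server - midpoint


def playhead(local_now: float, offset: float,
             start_time_server: float, duration: float) -> float:
    """Seconds into the animation, clamped to [0, duration]."""
    elapsed = (local_now + offset) - start_time_server
    return min(duration, max(0.0, elapsed))
```

A headset that wakes up or joins mid‑animation computes the same playhead as the others from the shared start time, so it can jump straight to the current frame instead of replaying from zero.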

Human Occlusion & Depth Accuracy

Challenge: Virtual CT must hide behind real people + objects in MR.

- Quest depth API + confidence masks

- Stencil + depth pre‑pass for clean edges

- Dynamic fallback to silhouette occlusion

Solution: Real‑time depth‑aware compositing pipeline.
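The per‑pixel decision behind depth‑aware occlusion with a fallback can be sketched as follows. In the app this logic would run in a shader; plain Python is used here only to show the rule. The confidence threshold, depth bias, and the meaning of the silhouette flag are assumptions for illustration.

```python
def is_virtual_visible(virtual_depth_m: float, real_depth_m: float,
                       confidence: float, in_silhouette: bool,
                       min_confidence: float = 0.5,
                       bias_m: float = 0.02) -> bool:
    """Decide whether a virtual pixel is drawn over the passthrough image.

    With a trustworthy depth sample, the virtual pixel is drawn only if it is
    not behind the real surface (a small bias reduces flicker at contact).
    With low confidence, fall back to a coarse silhouette mask: pixels inside
    a detected person/object silhouette are hidden.
    """
    if confidence >= min_confidence:
        return virtual_depth_m <= real_depth_m + bias_m
    return not in_silhouette


def composite_row(virtual_depths, real_depths, confidences, silhouettes):
    """Apply the rule to one row of pixels; returns a list of booleans."""
    return [
        is_virtual_visible(v, r, c, s)
        for v, r, c, s in zip(virtual_depths, real_depths, confidences, silhouettes)
    ]
```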

AR ↔ MR Shared Pose System

Challenge: Tablet AR and Quest MR must show the device in the same physical spot.

- Shared anchor framework

- Pose gateway + smoothing filter

- Universal animation events + timestamps

Solution: Common world anchor sync + cross‑device pose fusion.
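A minimal sketch of the idea, assuming a floor‑constrained pose reduced to position in the floor plane plus yaw: the CT pose is stored relative to the shared anchor, each device converts it into its own local frame, and a simple exponential filter damps jitter. The axis and sign conventions here are generic 2D ones and would need to match the engine's; the function names are illustrative.

```python
import math


def to_local(anchor_pose, relative_pose):
    """Convert an anchor-relative pose to the device's local frame.

    anchor_pose: (x, z, yaw_rad) of the shared anchor in the local frame.
    relative_pose: (x, z, yaw_rad) of the CT relative to the anchor.
    """
    ax, az, ayaw = anchor_pose
    rx, rz, ryaw = relative_pose
    c, s = math.cos(ayaw), math.sin(ayaw)
    # Rotate the relative offset by the anchor's yaw, then translate.
    return (ax + c * rx - s * rz, az + s * rx + c * rz, ayaw + ryaw)


def smooth(previous, target, alpha):
    """Move a fraction alpha (0..1) from the previous pose toward the target."""
    px, pz, pyaw = previous
    tx, tz, tyaw = target
    # Shortest signed angle, so the filter never turns the long way round.
    dyaw = math.atan2(math.sin(tyaw - pyaw), math.cos(tyaw - pyaw))
    return (px + alpha * (tx - px), pz + alpha * (tz - pz), pyaw + alpha * dyaw)
```

Because only the anchor‑relative pose is shared, a tablet and a headset with different local origins still place the device at the same physical location.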

Performance at Medical Demo Quality

Challenge: 90–120 FPS in headset + casting + sync.

- URP mobile tuning + GPU instancing

- 200k tri limit, LODs, baked materials

- Network ticks decoupled from render

- Adaptive resolution + v‑sync policies

Solution: Render budget discipline + asynchronous network layer.
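Two pieces of the above lend themselves to a short sketch: the per‑frame time budget implied by the FPS target, and a fixed‑rate network tick driven by an accumulator so that network work does not scale with render rate. This is one common way to decouple the two, not necessarily the one used in the project; the class name and the integer‑microsecond timing are assumptions.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame in milliseconds at a given frame rate."""
    return 1000.0 / fps


class FixedTicker:
    """Runs network ticks at a fixed rate regardless of render frame time."""

    def __init__(self, tick_hz: int):
        self.interval_us = 1_000_000 // tick_hz  # integer microseconds avoid drift
        self.accumulator_us = 0

    def advance(self, frame_dt_us: int) -> int:
        """Add one frame's duration; return how many ticks are due now."""
        self.accumulator_us += frame_dt_us
        ticks = self.accumulator_us // self.interval_us
        self.accumulator_us -= ticks * self.interval_us  # keep the remainder
        return ticks
```

At 90 FPS the budget is about 11.1 ms per frame and at 120 FPS about 8.3 ms, so rendering, occlusion, and any per‑frame sync work must fit inside that window. With a 50 Hz ticker, most render frames trigger no network tick at all.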

Impact & Results

Clinical clarity, exhibition reliability, and future‑proof asset pipeline.

Clinical Impact

- Doctors see true scale + motion paths

- Hands‑free guided viewing for clinicians

- Occlusion ensures realism + trust

Operational Impact

- Offline‑safe for conferences + hospitals

- Kiosk mode stops accidental exits

- Future CT revisions plug‑and‑play
